A Student's Guide to Software Engineering Tools & Techniques

An Introduction to GPGPU

Authors: Pierce Anderson Fu, Nguyen Quoc Bao

§ 1. GPGPU

§ 1.1 What is GPGPU?

GPGPU stands for general-purpose computing on graphics processing units. It is the use of a graphics processing unit (GPU), which typically handles computation only for computer graphics, to perform computation in applications traditionally handled by the central processing unit (CPU).[1]

Simply put, it is a form of parallel processing in which we exploit the data-parallel hardware on GPUs to improve the throughput of our programs.

§ 1.2 Why Bother With Parallel Processing?

Moore's law is the observation, made by Gordon Moore, that the number of transistors on an integrated circuit doubles approximately every two years. It has long been co-opted by the semiconductor industry as a target, and consumers have come to take this growth for granted.
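
As a rough worked example, assume an exact doubling every two years and let N₀ be the transistor count at some starting year (a symbol introduced here only for illustration). The count after t years would then be:

```latex
N(t) \approx N_0 \cdot 2^{t/2}
\qquad\Rightarrow\qquad
N(20) \approx N_0 \cdot 2^{10} = 1024\,N_0
```

In other words, twenty years of this trend means roughly a thousandfold increase.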

Because this is exponential growth, it cannot be expected to continue indefinitely. In Moore's own words, "It can't continue forever."[2] There are hard physical limits to this scaling, such as the rate at which heat can be dissipated[3] and how small microprocessor features can be made.[4]

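For software engineers, this means that we can no longer expect free, regular performance gains from each new generation of hardware.[5] Since further throughput gains increasingly come from more cores rather than faster ones, we need to write code differently to fully exploit them.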

§ 1.3 Aren't Multicore CPUs Enough?

CPUs and GPUs differ in both scale and architecture.

- In terms of scale, CPUs have relatively few cores (typically a few to a few dozen), while GPUs house up to thousands of cores.

- In terms of architecture, CPUs are designed to execute sequential code and branches efficiently, while GPUs excel at performing simple computations on large amounts of data in parallel.

As a result, CPUs and GPUs excel at different tasks. You'll typically want to use GPGPU for tasks that are data parallel and compute intensive (e.g. graphics, matrix operations).

Definitions:

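Data parallelism refers to performing the same operation on many different data elements simultaneously.

Compute intensive refers to algorithms that perform a lot of arithmetic relative to the amount of data they read from and write to memory, so that computation rather than data transfer dominates the running time.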

§ 1.4 What are the Challenges With GPGPU?

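Not all problems are inherently parallelizable. If each step of an algorithm depends on the result of the previous step, the steps must run one after another, and adding more cores does not help.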

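The SIMT (Single Instruction, Multiple Threads) architecture of GPUs means that they handle branches and inter-thread communication poorly. Threads are executed in groups that share a single instruction stream, so when threads in the same group take different branches, the group has to execute each path in turn while the threads not on that path sit idle. Likewise, threads that exchange data must synchronize explicitly, which forces them to wait for one another.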

§ 1.5 Implementations

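- CUDA (NVIDIA's platform for programming its own GPUs): Official website

- OpenCL (an open standard maintained by the Khronos Group and supported across many vendors' hardware): Official website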

§ 1.6 GPGPU in Action

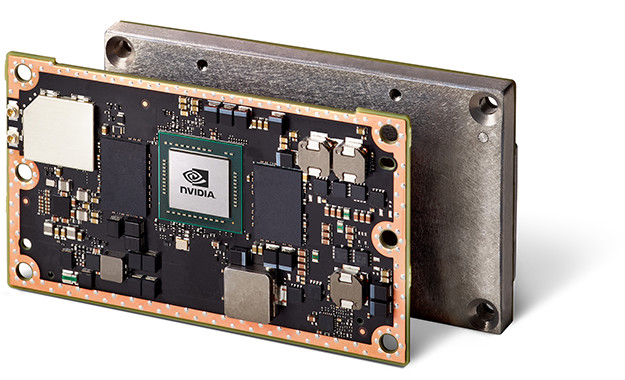

The benefits of GPGPU are even more pronounced in embedded systems and Internet of Things (IoT) applications, where computing power is often traded off against physical space, weight, and power consumption. For instance, NVIDIA's Jetson TX2 is a system-on-module that delivers the processing capability of the Pascal GPU architecture in a package the size of a business card.[6] The Pascal architecture is also used in many desktop computers, data centres, and supercomputers.[7] Combined with its small form factor, this makes the Jetson well suited to embedded systems that require intensive processing power.

NVIDIA Jetson TX2 embedded system-on-module with Thermal Transfer Plate (TTP)

Packed with an NVIDIA Pascal GPU with 256 CUDA cores clocked at up to 1300 MHz,[6] the TX2 can perform intensive parallel computational tasks such as real-time vision processing and running deep neural networks, allowing mobile platforms to solve complex, real-world problems.
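
To put those numbers in perspective, here is a back-of-the-envelope theoretical peak for 32-bit floating-point arithmetic. It assumes that each CUDA core completes one fused multiply-add (counted as two floating-point operations) per clock cycle at the maximum clock; real applications achieve only a fraction of such a peak.

```latex
256\ \text{cores} \times 1.3 \times 10^{9}\ \tfrac{\text{cycles}}{\text{s}} \times 2\ \tfrac{\text{FLOP}}{\text{core} \cdot \text{cycle}} \approx 6.66 \times 10^{11}\ \tfrac{\text{FLOP}}{\text{s}} \approx 666\ \text{GFLOP/s}
```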

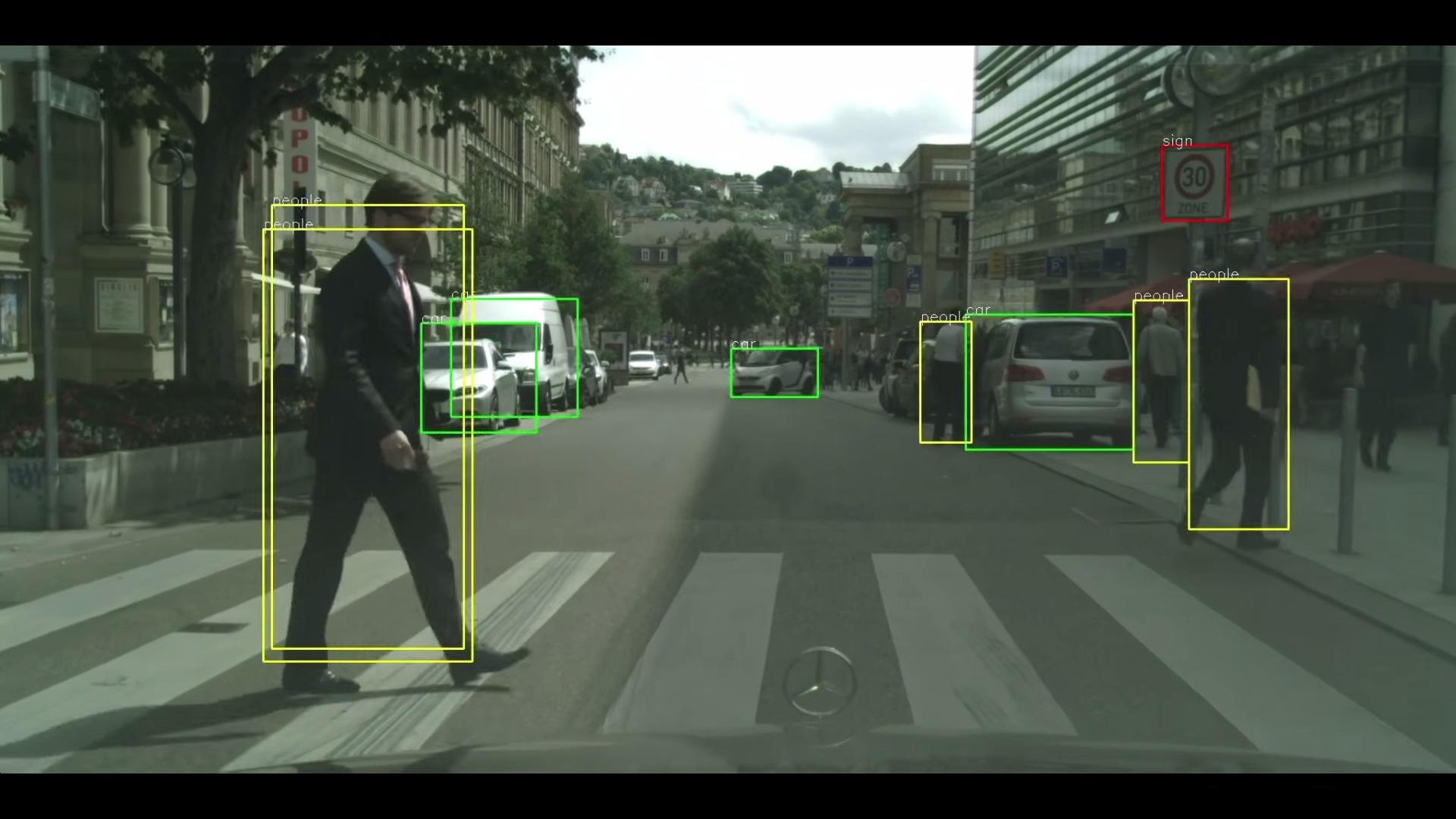

CES 2016: NVIDIA DRIVENet Demo - Visualizing a Self-Driving Future

Compatible with a wide range of components and ecosystem products,[8] the Jetson platform is now widely used to deploy vision and neural network processing on board mobile platforms such as self-driving cars, autonomous robots, drones, IoT devices, and handheld medical devices.[9]

§ 2. Further Readings

- How concurrency is the next big change in software development since OO

- Official CUDA C programming guide: What GPUs excel at processing, and why

- Official CUDA C programming guide: Architecture of NVIDIA GPUs

- Lightning talk slides: An Introduction to GPGPU

- se-edu's learning resource on CUDA

§ 3. References

[1]: https://en.wikipedia.org/wiki/General-purpose_computing_on_graphics_processing_units

[2]: https://www.techworld.com/news/operating-systems/moores-law-is-dead-says-gordon-moore-3576581/

[3]: https://theory.physics.lehigh.edu/rotkin/newdata/mypreprs/spie-09b.pdf

[4]: https://arstechnica.com/gadgets/2016/07/itrs-roadmap-2021-moores-law/

[5]: http://www.gotw.ca/publications/concurrency-ddj.htm

[6]: https://www.nvidia.com/en-us/autonomous-machines/embedded-systems-dev-kits-modules/

[7]: https://en.wikipedia.org/wiki/Tegra#Tegra_X1/

[8]: https://elinux.org/Jetson_TX2#Ecosystem_Products

[9]: https://developer.nvidia.com/embedded/learn/success-stories